Contents

Synopsis

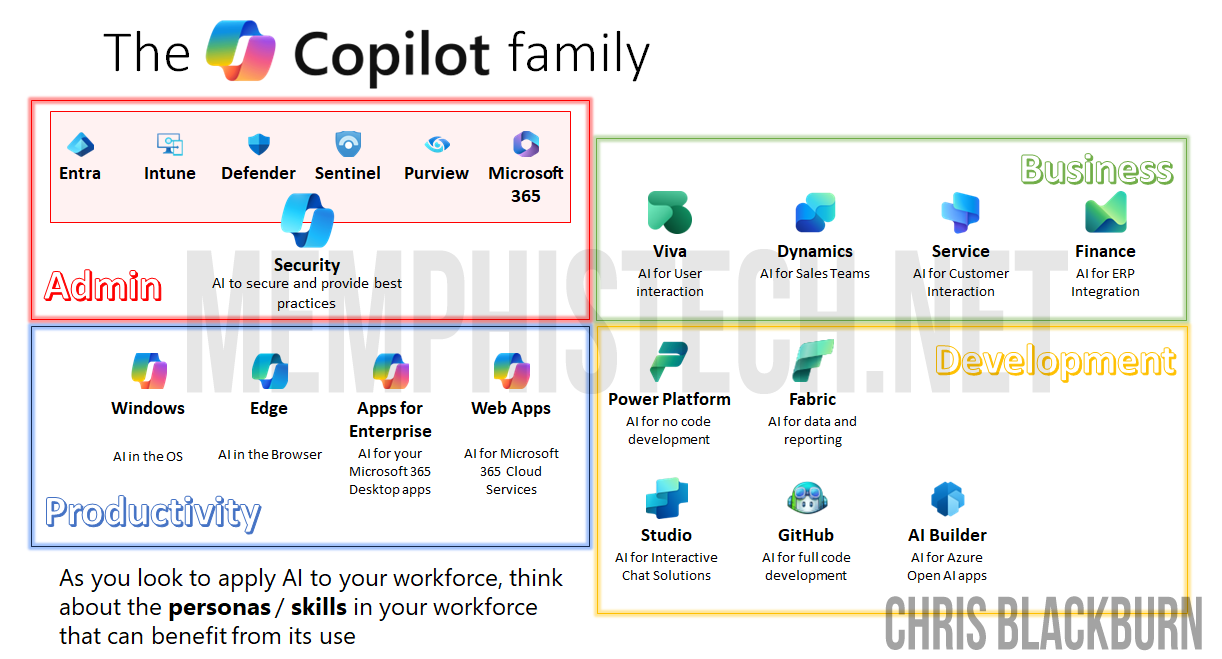

• As AI becomes deeply integrated into workplace productivity, understanding and governing its usage is critical for IT leaders.

• Learn how to explore native Microsoft 365 capabilities to monitor, analyze, and secure AI usage—especially Microsoft 365 Copilot and other generative AI tools.

• Learn how to leverage built-in dashboards, audit logs, and compliance tools to gain actionable insights, ensure responsible AI adoption, and align with organizational governance policies.

Why Monitor AI Usage?

Rise of generative AI in productivity tools (e.g., Microsoft 365 Copilot)

• AIPRM: A survey found that three-quarters (75%) of surveyed workers were using AI in the workplace in 2024, with nearly half (46%) beginning to do so within the last six months.

Additionally, in 2023, 75% of companies said they were looking to adopt AI within the next five years.

• Forbes: The AI market size is expected to reach $1,339 billion by 2030, up substantially from its estimated $214 billion in revenue in 2024. The same source states that AI is expected to contribute a significant 21% net increase to the United States GDP by 2030.

• U.S. Bureau of Labor Statistics: The employment of software developers is projected to increase by 17.9% between 2023 and 2033, much faster than the average for all occupations (4.0%)

AI Is Everywhere – And it Can Be Scary!

• Cybernews: According to a new Cybernews report, 84% of AI tools leaked data, with nearly 90% experiencing breaches.

• Stanford’s 2025 AI Index Report: The report lists the 15 biggest risks of AI, including lack of transparency, bias and discrimination, privacy concerns, ethical dilemmas, and security risks

• Secureframe: An article lists the 15 biggest risks of AI, including lack of transparency, bias and discrimination, privacy concerns, ethical dilemmas, and security risks

AI & Threats

The rapid adoption of AI technologies is reshaping the threat landscape by enabling attackers to create new attacks, such as deepfakes, and augment existing social engineering attacks.

It is also creating new exposures for organizations due to AI adoption and custom AI-application creation. Gartner in their annual ThreatScape analysis shows a wide number of threats that continue

AI & Transparency

By being transparent about how AI systems operate, organizations can foster a culture of trust and responsibility, ultimately leading to more successful and ethical AI deployments.

• Building Trust:

• Transparency helps build trust among stakeholders, including employees, customers, and regulators. When AI systems are transparent, stakeholders can understand how decisions are made, which fosters confidence in the technology.

• Compliance and Governance:

• Transparency is critical for ensuring compliance with legal and regulatory requirements. It helps organizations demonstrate that their AI systems are operating within the bounds of the law and adhering to ethical standards.

• Mitigating Risks:

• Transparent AI systems allow organizations to identify and mitigate potential risks, such as bias, discrimination, and privacy concerns. By understanding how AI systems work, organizations can take proactive measures to address these issues.

• Enhancing Accountability:

• Transparency ensures that AI systems are accountable for their actions. This means that if something goes wrong, it is easier to trace the source of the problem and take corrective action.

What Tools Can I Use?

Working Better Together

Microsoft 365 Admin Center: Readiness & Reporting

Understand how Microsoft tools provide reporting and use centralized controls over user access and application usage to ensure security, compliance, data protection, and effective management within an organization, not only for Microsoft 365 Copilot but for any AI tool.

Copilot Control Center: tenant level controls for Copilot

Some of the common controls you can find from the Copilot Control Center

⚙️ Configurable Scenarios

Directly Configurable in Admin Center:

- Copilot Diagnostics Logs: Submit feedback logs on behalf of users.

- Copilot Image Generation: Enable/disable image creation features.

- Copilot in Edge: Configure via Edge configuration profiles.

- Copilot in Viva: Manage writing assistance and engagement features.

Managed via Shortcuts to Other Admin Centers:

- Copilot in Teams Meetings: Managed in Teams Admin Center.

- Copilot in Power Platform & Dynamics 365: Managed in Power Platform Admin Center.

- Copilot Chat Consumption Meter: Linked to Power Platform billing plans.

- Copilot in Bing, Edge, and Windows: Informational only; not configurable[1].

Agent Management (via Agents & Connectors)

Admins can:

- View & Manage Agent Inventory: Includes metadata like capabilities, knowledge sources, and custom actions.

- Deploy, Block, or Remove Agents: Control availability and access.

- Assign Agents to Users/Groups: Granular access control.

- Review & Approve Agent Requests: Streamlined publishing process for custom agents built in Copilot Studio.

- Enable/Disable Extensibility: Control who can use agents across the organization[2].

Cost & Billing Controls

- Billing Policies: Define departmental budgets and spending limits.

- Alerts: Set thresholds for usage and receive notifications.

- Azure Subscription Integration: Link billing plans to subscriptions for pay-as-you-go services[3].

Copilot Readiness Reports: license eligibility and deployment status

The Copilot Control System Overview blade provides a high-level snapshot of usage by product…..

The Microsoft 365 Copilot Usage Reports enable you to export all usage data and investigate which apps are most popular for Copilot use and where to increase communication campaigns for the others.
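As a rough illustration of that analysis, the sketch below ranks apps by the share of licensed users who were active in Copilot and returns the ones that fall under a chosen share. The input dictionary, the function name `campaign_candidates`, and the 25% cutoff are all hypothetical; they are not part of the exported report format, so the figures would need to be taken from your own export.

```python
def campaign_candidates(
    active_users_by_app: dict[str, int],
    licensed_users: int,
    min_share: float = 0.25,  # hypothetical cutoff, tune for your organization
) -> list[tuple[str, float]]:
    """Return (app, share) pairs below min_share, lowest adoption first."""
    if licensed_users <= 0:
        raise ValueError("licensed_users must be positive")
    shares = {
        app: active / licensed_users
        for app, active in active_users_by_app.items()
    }
    low = [(app, share) for app, share in shares.items() if share < min_share]
    return sorted(low, key=lambda pair: pair[1])


# Hypothetical numbers, for illustration only.
sample_usage = {"Teams": 80, "Word": 40, "Excel": 10, "OneNote": 20}
candidates = campaign_candidates(sample_usage, licensed_users=100)
for app, share in candidates:
    print(f"{app}: {share:.0%} of licensed users active")
```

Apps returned by the function are the ones where a communication campaign is most likely to move adoption.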

Built-in feedback collection allows users to submit their feedback on Copilot, and occasional survey insights help you gauge usability and satisfaction.

Viva Insights: Rich Copilot Analytics

Licensing: Hitting the Threshold

Activating Insights with Copilot

• A minimum of 50 assigned Viva Insights licenses, or 50 assigned Copilot licenses (including the Viva Insights service plan), is required for data processing to kick off.

• Data processing takes up to seven days following license assignment.

Here are the capabilities that Insights unlocks:

| Total number of Copilot assigned licenses in tenant | Viva Insights assigned license in the tenant (Yes or No) | Availability of features in Microsoft Copilot Dashboard |
| --- | --- | --- |
| N/A | Yes (at least 50) | All features: • Readiness page • Adoption page with tenant-level and group-level metrics plus filters • Impact page with group-level metrics plus filters • Sentiment with group-level survey results |
| 50 or more | N/A | All features: • Readiness page • Adoption page with tenant-level and group-level metrics plus filters • Impact page with group-level metrics plus filters • Sentiment with tenant-level survey results |
| Less than 50 | N/A | Limited features: • Readiness page • Adoption page with tenant-level metrics only • Sentiment with tenant-level survey results |
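The licensing rules in the table can be read as a simple decision function. The sketch below is only a restatement of the three table rows in Python; the names `DashboardAccess` and `dashboard_access` are made up for illustration and do not correspond to any Microsoft API.

```python
from dataclasses import dataclass

MIN_LICENSES = 50  # threshold taken from the table


@dataclass(frozen=True)
class DashboardAccess:
    tier: str                  # "all" or "limited"
    group_level_metrics: bool  # group-level Adoption and Impact metrics
    sentiment_scope: str       # "group" or "tenant" survey results


def dashboard_access(copilot_licenses: int, viva_insights_licenses: int) -> DashboardAccess:
    """Map assigned license counts to the feature tier listed in the table."""
    if viva_insights_licenses >= MIN_LICENSES:
        # Row 1: Viva Insights licenses unlock everything, with group-level sentiment.
        return DashboardAccess("all", True, "group")
    if copilot_licenses >= MIN_LICENSES:
        # Row 2: 50 or more Copilot licenses unlock everything, with tenant-level sentiment.
        return DashboardAccess("all", True, "tenant")
    # Row 3: below the threshold only tenant-level views are available.
    return DashboardAccess("limited", False, "tenant")


print(dashboard_access(copilot_licenses=120, viva_insights_licenses=0))
```

A check like this can be useful in a licensing script to predict which dashboard pages a tenant should expect before data processing completes.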

Copilot Dashboard: usage metrics and trends

Microsoft Viva Insights metrics at their core

When we unlock the licensed capabilities, we then start to see group level metrics

Thresholds

Microsoft Viva Insights Privacy settings can give you a little more flexibility to lower the thresholds

Advanced Insights Workbench: Power BI templates for deep dives

Measure Copilot impact using the metrics that matter most.

Business leaders can also discover key Copilot metrics and insights.

You can select from more than 100 Copilot metrics and customize filters to answer granular and specific questions.

Roles for Reports

Admins (even Global Admins) don’t see the reports by default.

Once the account is added to the Insights Analyst role in Entra, you’ll see these reports.

Running Reports

Microsoft Viva Insights Advanced Analytics reports need to be scheduled and run so that they show your own data rather than Microsoft's canned data.

Viva Insights Shows Value

Microsoft Viva Insights Advanced Analytics helps you effectively communicate the real value and savings Copilot brings to your end users

Defender for Cloud Apps: Next Generation Detection

Detection of AI

Security teams can use Defender for Cloud Apps to discover and efficiently manage Generative AI apps.

The “Generative AI” category helps organizations quickly identify which apps are considered generative AI, which streamlines this process.

Modern App Detection

| Traditional | Modern |
| --- | --- |
| • Firewall logs • Stale reports • Admin monitoring and response | • Onboard your devices with Defender for Endpoint • Supported across Windows / macOS / virtualized / Cloud environments • Automatic detection of Shadow IT and blocking of unsanctioned apps |

Integrating Microsoft Defender

Under Endpoint General settings, enable Microsoft Defender for Cloud Apps Integration

Under Cloud Apps settings, under Cloud Discovery / Microsoft Defender for Endpoint, check Enforce app Access

Enforcement

Cloud App Policies

Create 2 Cloud app policies:

- One that automatically blocks risky Generative AI apps (risk score under 5)

- One that automatically sets the remaining low-risk apps (risk score above 6) to Monitored
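The two policies amount to a threshold rule on each app's risk score. The sketch below expresses that rule in Python purely as an illustration; it is not how Defender for Cloud Apps evaluates policies. It assumes a 0 to 10 score scale, and because the thresholds above leave scores of 5 and 6 unaddressed, it returns a hypothetical "Review" outcome for them. The app names and scores are invented.

```python
def policy_action(risk_score: int) -> str:
    """Return the tag the two cloud app policies would apply for a score."""
    if not 0 <= risk_score <= 10:  # assumed score range
        raise ValueError("risk score is expected to be between 0 and 10")
    if risk_score < 5:
        return "Unsanctioned"  # policy 1: block risky Generative AI apps
    if risk_score > 6:
        return "Monitored"     # policy 2: allow low-risk apps but watch them
    return "Review"            # assumption: scores 5 and 6 match neither policy


# Hypothetical discovered apps and scores, for illustration only.
discovered_apps = {"ExampleChatbot": 3, "ExampleWriter": 8, "ExampleSummarizer": 6}
actions = {app: policy_action(score) for app, score in discovered_apps.items()}
for app, action in actions.items():
    print(f"{app}: {action}")
```

Writing the rule out this way makes the gap at scores 5 and 6 visible, so you can decide whether to widen one of the two policies to cover it.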

User Notifications

Create 3 pages in your SharePoint environment:

- One that defines your Cloud App policies

- One with verbiage for apps that are “Monitored” (notification URL), with a link to #1

- One with verbiage for apps that are “Unsanctioned” (blocked URL), with a link to #1

Add these to your User notification URLs.

Reporting

The Cloud Discovery Generative AI category reports on more than 400 known applications. Find devices, users, bandwidth used, and more.

Microsoft Purview: Compliance & Security for AI

Audit Log Search

Purview provides audit log search across the Microsoft 365 environment, letting you dig deep into Copilot activities and workloads.

DSPM for AI

DSPM for AI helps you discover, monitor and secure all AI activity in Microsoft Copilot, agents, and other AI apps to keep your data safe.

• Activate Microsoft Purview Audit to get insights into user interactions with Microsoft Copilot experiences and agents.

• Onboard devices to Microsoft Purview to protect sensitive data from leaking to other AI apps.

• Install Microsoft Purview browser extension to detect risky user activity and get insights into user interactions with other AI apps.

• Follow Guided Recommendations to get started in creating policies for detecting activity in your tenant

• Extend your insights for data discovery to discover sensitive data in user interactions with other AI apps.

Let's look at two important onboarding methods that you’ll want to target to your endpoints.

Onboarding

| Good | Better |
| --- | --- |
| Onboard your devices with Defender for Endpoint DLP • Supported across Windows / macOS / virtualized / Cloud environments | Use Intune to push the Purview extension to supported browsers • Microsoft Purview Extension – Chrome Web Store (google.com) • Edge has native capabilities |

Guided Recommendations

Get step-by-step help enabling best practice settings around AI

Some features will require changing to Purview Enterprise by connecting an Azure subscription. You can find this under Settings and Account, where you can upgrade your account by defining an Azure resource group to store log data

Apps and Agents

Microsoft Purview protection for AI applications and agents dashboard shows the depth and breadth of core app use over the last 30 days.

Activity Reporting

DSPM for AI reports show interactions within core Microsoft AI platforms

DSPM for AI reports show interactions with popular AI platforms (Copilot, ChatGPT, Gemini) and where there is a DLP activity match

The Next Step: Enforcement

• Insider Risk Policy: Detecting Users Accessing Generative AI Platforms

• The Insider Risk Policy is designed to detect when users within your organization attempt to browse or interact with external generative AI platforms like ChatGPT, Google Gemini, or other AI assistants

• Data Loss Prevention (DLP) Policy: Preventing Sensitive Data from Being Shared

• Data Loss Prevention Policy (DLP) serves as a safeguard against sensitive data being copied, pasted, or uploaded into external AI platforms.

• Adaptive Protection in AI Assistants

• The Adaptive Protection policy enhances security dynamically, based on user risk profiles and behaviors. It ensures more robust protection in high-risk scenarios when sensitive data is involved in interactions with generative AI platforms.

• Communication Compliance: Unethical Behavior in Copilot

• This policy focuses on ethical usage of AI-powered tools like Microsoft Copilot. It ensures that employees interact responsibly with AI, preventing scenarios where unethical behavior, such as attempts to bypass data protection, occurs.

Real-World Scenarios

Audit Search

Monitor logs to ensure regulatory adherence and safeguard against misuse by using Audit in Purview. Configure the search using:

• Activities – friendly names: Interacted with Copilot

• Workload: Copilot

This is a decent mechanism, but Communication Compliance provides more detail.
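If the audit search results are exported to CSV, a short script can summarize them. The sketch below counts Copilot interactions per user. It assumes the export contains `UserId` and `Operation` columns and that Copilot activity appears with the operation value `CopilotInteraction`; verify both against your own export, since the sample rows here are invented.

```python
import csv
import io
from collections import Counter

COPILOT_OPERATION = "CopilotInteraction"  # assumed operation value in the export


def copilot_interactions_per_user(csv_text: str) -> Counter:
    """Count audit rows per user whose Operation matches the Copilot value."""
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row.get("Operation") == COPILOT_OPERATION:
            counts[row.get("UserId") or "unknown"] += 1
    return counts


# Invented sample rows standing in for an exported audit search result.
sample_export = "\n".join([
    "CreationDate,UserId,Operation",
    "2025-01-06T09:00:00Z,alex@contoso.com,CopilotInteraction",
    "2025-01-06T09:05:00Z,alex@contoso.com,CopilotInteraction",
    "2025-01-06T09:07:00Z,sam@contoso.com,FileAccessed",
    "2025-01-06T09:10:00Z,sam@contoso.com,CopilotInteraction",
])

per_user = copilot_interactions_per_user(sample_export)
for user, count in per_user.most_common():
    print(f"{user}: {count}")
```

To run it against a real export, read the file contents into a string and pass that string to the function in place of `sample_export`.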

AI App Usage

The Cloud Discovery Generative AI category reports on more than 400 known applications. Find devices, users, bandwidth used, and more.

In-Depth AI Analytics

The Apps and Agents blade in DSPM for AI provides granular details around Copilot experiences & agents

Monitor Interactions

Monitoring AI interactions helps detect sensitive information that may have been shared. In DSPM for AI in Purview, enable the policies “Detect risk interactions in AI apps” and “Detect sensitive info added to AI sites”, and regularly review interactions in other AI apps for sensitive content.

Risky Communication

Detect and flag risky AI communication, ensure compliance, prevent leaks, and maintain a safe workplace via Communication Compliance in Purview. Create a policy with Copilot as the location to generate a report, which will be visible under Policies for further review.

Block AI in Apps

Restrict high-risk users, ensuring security without hindering low-risk productivity, through Data Loss Prevention within Microsoft Purview. Create a policy targeting Teams chat and channel messages with sensitive information.

AI Interaction Retention

Retain relevant AI activity or delete non-relevant user actions sooner through Data Lifecycle Management within Purview. Create a retention policy by toggling on Teams chats and Microsoft 365 Copilot interactions, and target groups, meetings, and chats for content.

Best Practices

AI Principles for the Enterprise

• Laws & Transparency: AI use must comply with laws, be transparent, and clearly labeled as AI-generated.

• Fairness: Ensure fairness and avoid discrimination when using AI tools.

• Training data: Data should be unbiased and relevant.

• Human oversight: Oversight is required; do not fully automate business processes with AI.

• Monitoring: AI systems must be regularly monitored for accuracy and alignment with company goals.

• Ethics: AI must be used ethically and responsibly to further company objectives.

AI Considerations

• Respect intellectual property rights: Ensure the outputs do not infringe on third-party copyrights or patents, and be aware that AI-generated content may not always be protected by copyright.

• Safeguard confidentiality: Never input sensitive or confidential information into AI tools unless the tool has been specifically approved for that purpose, and always follow organizational data protection standards.

• Verify accuracy: AI-generated content can include errors or fabricated information, so all outputs must be carefully reviewed by a human for correctness and clearly labeled as AI-generated.

Responsible AI Use

• AI as a starting point: Treat AI-generated content as a draft; always apply human judgment and creativity before considering it final.

• Proofreading required: Always review AI-created content for errors, clarity, and suitability before sharing or publishing.

• Fact-check information: Verify accuracy, sources, and evidence for all statements in AI content.

• Adhere to policies: Ensure all AI use follows applicable organizational guidelines and policies.

• Report concerns: Raise any issues or potential policy violations to appropriate contacts or through established reporting channels.

• Stay updated: Regularly review and follow the latest guidelines related to AI use, and ask questions if unsure

Monitoring AI – Reports

• M365 > Copilot Control System Overview

• M365 > Microsoft 365 Copilot Usage Reports

• M365 > NPS Surveys insights

• Viva Insights > Reports

• Viva Insights > Advanced Analytics

• Defender > Cloud Discovery Report

• Purview > DSPM for AI Reports

• Purview > Agents & Experiences

• Purview > Audit